SQL Server A to Z – FILESTREAM

Data doesn’t always come in a nice, neat, relational format. Oftentimes it’s unstructured files like Word documents or images. But it still needs to be stored in some manner, right? In previous versions of SQL Server, you could store this kind of data in the database using varbinary or image columns. Another alternative was keep the unstructured files outside the data and just store the location of the files in the database. Each of these options has its shortcomings, be it performance, data consistency, code complexity or basic database maintenance.

Data doesn’t always come in a nice, neat, relational format. Oftentimes it’s unstructured files like Word documents or images. But it still needs to be stored in some manner, right? In previous versions of SQL Server, you could store this kind of data in the database using varbinary or image columns. Another alternative was keep the unstructured files outside the data and just store the location of the files in the database. Each of these options has its shortcomings, be it performance, data consistency, code complexity or basic database maintenance.

Filestream

With SQL 2008 came a new feature called FILESTREAM. FILESTREAM allows us to store this unstructured data in an NTFS filesystem while still treating it like part of the database. You can use basic T-Sql statements like SELECT, INSERT, and UPDATE against FILESTREAM data. And you can back up FILESTREAM data as part of the database. Pretty neat, huh?

Enabling FILESTREAM

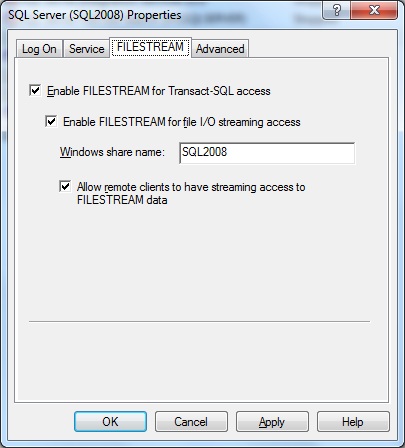

FILESTREAM can be enabled as part of the SQL Server installation. However, this is not the default, so unless you specifically configured it at install-time, it’s probably not enabled on your server. Don’t worry, though, FILESTREAM can be enabled relatively easily after installation. Open up SQL Server Configuration Manager, right-click on the SQL Server Service and select Properties. Click on the FILESTREAM tab. Select the appropriate checkboxes and provide a Share Name as shown below. Click OK.

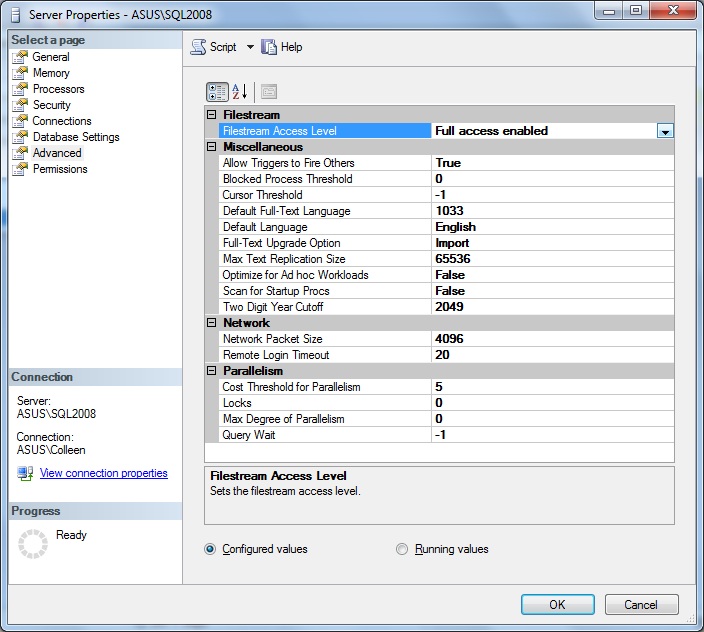

Next, in SSMS, right-click on the instance name and select Properties. Go to Advanced, and click the drop down menu for Filestream Access Level. Choose Transact-Sql Access Enabled or Full Access Enabled, and click OK.

FILESTREAM is now enabled for your SQL instance.

Using FILESTREAM

The first step in actually using FILESTREAM data is to create a FILESTREAM-enabled database. This is really just your typical CREATE DATABASE statement, with an additional filegroup. This will tell SQL Server where to store the unstructured data. SQL Server will create the folder specified, so make sure it doesn’t already exist. Using my example below, ensure the “filestream1” folder doesn’t already exist in “D:\data”. If it does, you’ll get an error.

CREATE DATABASE MyFSDatabase ON PRIMARY ( NAME = MyFSData1, FILENAME = 'D:\data\FSData1.mdf'), FILEGROUP FileStreamGroup1 CONTAINS FILESTREAM( NAME = MyFSData2, FILENAME = 'D:\data\filestream1') LOG ON ( NAME = MyFSLog1, FILENAME = 'D:\logs\FSLog1.ldf') GO

Now we’re ready to create a table containing FILESTREAM data.

USE MyFSDatabase GO CREATE TABLE MyPics ( ID UNIQUEIDENTIFIER ROWGUIDCOL NOT NULL UNIQUE, Name varchar(50), Picture VARBINARY(MAX) FILESTREAM NULL); GO

And we can insert data using simple INSERT statements.

INSERT INTO MyPics SELECT NEWID(), 'Penguins', CAST(bulkcolumn AS VARBINARY(MAX)) FROM OPENROWSET( BULK 'D:\Penguins.jpg',SINGLE_BLOB ) AS x

You can’t really view FILESTREAM data via T-Sql. If I try to SELECT the row I just inserted the Picture column will just be a hex string. So you’d need an application to handle retrieving and viewing the data. But that’s the developer’s job, right? 😉

My last blog post

Here at cledba.wordpress.com, that is.

I’m very excited to say that this blog is moving! Beginning next week, I will be blogging at ColleenMorrow.com. New address, new look, same awesome content! So update your feeds/bookmarks/etc., and I hope to see you there.

The new site is online now, so go check it out and let me know what you think.

Why are you still here? GO!

SQL Server A to Z – Encryption

Hierarchies. Asymmetric keys. Symmetric keys. Certificates. Algorithms. Authenticators. Encrypting your data can seem like a very daunting and confusing process. On the one hand, you want your data to be secure. But on the other hand, you still want good performance. And, no pressure, but fail to plan properly and your data becomes completely unreadable. Makes you want to jump right in, doesn’t it? Well take a breath. Relax. It’s not that bad. And depending on your needs and wants, you’ve got some options.

The Dark Ages

Encryption in SQL Server as first introduced in SQL 2005. It was implemented at the column level, and it required that the column be defined as varbinary, and searching on encrypted columns was limited. Add to that the fact that the developer/application had to handle the encryption and decryption of data via stored procedures and, well, you’ve got yourself quite the little nightmare. (Not quite on the nightmare scale of bubonic plague, but definitely on par with medicinal leeches.) This type of encryption definitely has its place; if you’re developing an app with limited sensitive data, for example. But for wholesale data encryption, it’s not the best option.

The Renaissance

SQL 2008 brought database encryption out of the dark ages with Transparent Data Encryption (TDE). With TDE, you can encrypt your entire database without having to make any object changes, without any code changes, and without any loss of functionality. Hence the whole “transparent” thing. And it’s relatively easy to implement.

Start by creating a master key. This key is used to protect private keys of certificates as well as asymmetric keys in the database.

USE master; GO CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'SuperStr0ngP@55w0rd!?';

The next step is to create a certificate that will protect the Database Encryption Key (DEK). The DEK is used to encrypt the data, so it’s kinda important that nothing happens to this certificate. Therefore, you want to make sure you back it up to a safe place.

CREATE CERTIFICATE TDECert WITH SUBJECT = 'TDE DEK Certificate';

BACKUP CERTIFICATE TDECert TO FILE = 'D:\securelocaton\TDECert.cer'

WITH PRIVATE KEY (

FILE = 'D:\securelocaton\TDEPrivateKey',

ENCRYPTION BY PASSWORD = 'SuperStr0ngP@55w0rd!?');

Now we need to create the DEK. Again, this tells SQL Server how to encrypt (and decrypt) the database.

USE AdventureWorks2008R2; GO CREATE DATABASE ENCRYPTION KEY WITH ALGORITHM = AES_256 ENCRYPTION BY SERVER CERTIFICATE TDECert

Note the ALGORITHM clause. SQL Server lets you choose from a variety of encryption algorithms, depending on your needs. For more information on what algorithms are available go here.

The final step in the process is to turn on encryption in the database.

ALTER DATABASE AdventureWorks2008R2

SET ENCRYPTION ON;

GO

Once this command is executed, SQL Server starts a background process that scans through the database and encrypts the data. All this can go on while normal database activity takes place. Just don’t try to alter any datafiles while the encryption process is running. You can monitor its progress by querying sys.dm_database_encryption_keys.

SELECT db_name(database_id), encryption_state

FROM sys.dm_database_encryption_keys

Some things to keep in mind:

- Filestream data is not encrypted

- Database backups are also encrypted. Read that again, it’s important. When you restore an encrypted database, the certificate used to protect the DEK at time of the backup also needs to be present. Backup your certificates with your database!

- Data pages are decrypted when they are read from disk, and encrypted before being written. Therefore, data in memory is not encrypted. Data paged out to disk is also unencrypted.

- When a user database is encrypted using TDE, tempdb is also encrypted.

As you can see, TDE isn’t too scary. It’s definitely a step above the previous alternative. If you want more information on TDE or encryption in general, be sure to check out these resources:

SQL Server A to Z – DMV

Today’s episode of SQL Server A to Z is brought to you by the letter D. D is for Dynamic Management View (DMV). DMVs were introduced in SQL 2005 as a way to provide administrators with a window into what’s going on in SQL Server at any given moment. This can range from OS statistics, to information about replication, to what execution plans are currently in cache. Today I’ll give you a brief look at a few of the DMVs I use.

Today’s episode of SQL Server A to Z is brought to you by the letter D. D is for Dynamic Management View (DMV). DMVs were introduced in SQL 2005 as a way to provide administrators with a window into what’s going on in SQL Server at any given moment. This can range from OS statistics, to information about replication, to what execution plans are currently in cache. Today I’ll give you a brief look at a few of the DMVs I use.

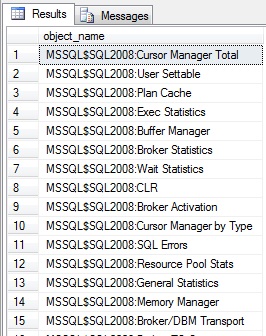

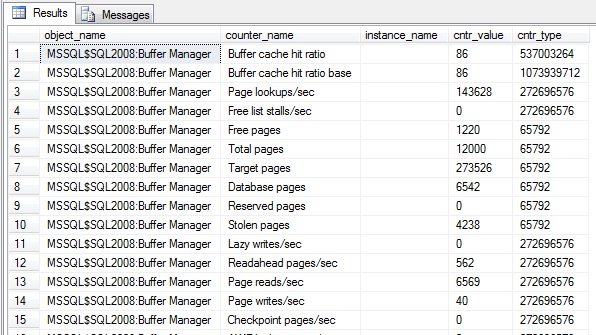

sys.dm_os_performance_counters

This view is like having Perfmon in T-Sql, you’ll see same SQL Server performance metrics in either. I happen to like Perfmon for gathering performance stats over a period of time, like when I’m getting a baseline for a server. But sometimes you just want a glimpse of how things are running right now, at this moment. Or maybe you want to see those stats next to a list of what processes are currently running in the database. That’s difficult, or impossible to do with Perfmon. So that’s when you’d use this DMV. Let’s take a look at the counters this DMV exposes.

SELECT distinct object_name from sys.dm_os_performance_counters

And if we drill down a little further:

SELECT * from sys.dm_os_performance_counters where object_name like '%Buffer Manager%'

And there you see the same counters for Buffer Manager that you’d see if you ran Perfmon. This is one of the DMVs I use in my script to see how SQL Server is using its memory;.

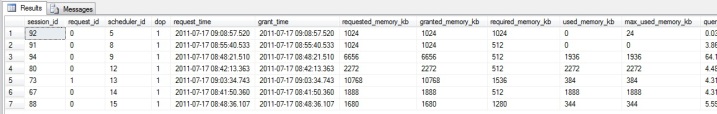

sys.dm_exec_query_memory_grants

I’ll admit this DMV doesn’t get used a lot. But when it is used, it’s very handy. The sys.dm_exec_query_memory_grants DMV returns information about current sessions that have been granted memory to execute or are waiting on memory grants. If I start seeing resource_semaphore waits in my database, this is where I go first. It will tell me how much memory each session has been granted, how much it requested, how much it would have requested given unlimited resources (useful for identifying very bad queries), the sql and plan handles (so you can go get the exact query) etc. Let’s take a look.

SELECT * from sys.dm_exec_query_memory_grants

sys.dm_db_index_usage_stats

The last DMV I’m going to cover is one I use quite regularly to determine what indexes are being used, how much they’re used, and when they’re used. The sys.dm_db_index_usage_stats view contains a record for every index that’s been used since the instance last started, along with counts for the various read and write operations that have been executed against it. Why is this helpful? If you monitor this view over time and find an index that has high user updates but no user seeks, scans or lookups, in most cases this index isn’t being used by the application and you might consider removing the index to eliminate the overhead of maintaining it. Now before you go out and start dropping indexes, there are some caveats to keep in mind. Those are outside the scope of this post, but google “SQL Server drop unused indexes” before you do anything else.

I know I said that would be the last one, but I feel I should mention a few DMVs that compliment sys.dm_db_index_usage_stats nicely. sys.dm_db_missing_index_groups, sys.dm_db_missing_index_group_stats, and sys.dm_db_missing_index_details views are commonly joined together to provide a list of indexes that SQL Server recommends based on usage. Like so:

SELECT

TableName=o.name, migs_Adv.index_advantage

, s.avg_user_impact

, s.avg_total_user_cost

, s.last_user_seek

,s.unique_compiles,

d.index_handle

,d.equality_columns, d.inequality_columns, d.included_columns, d.[statement]

from sys.dm_db_missing_index_group_stats s

inner join sys.dm_db_missing_index_groups g on g.index_group_handle=s.group_handle

inner join sys.dm_db_missing_index_details d on d.index_handle=g.index_handle

inner join sys.objects o (nolock) on o.object_id=d.object_id

inner join (select user_seeks * avg_total_user_cost * (avg_user_impact * 0.01) as index_advantage,

migs.* from sys.dm_db_missing_index_group_stats migs) as migs_adv on migs_adv.group_handle=g.index_group_handle

order by migs_adv.index_advantage desc, s.avg_user_impact desc

Keep in mind that all of these stats are reset at system startup. So you want to develop a procedure to retain this data over time. Personally, I have a script that captures the information once a week and I didn’t start using it until I had 4 months of data. Why so long? There are some processes that only run once a month. And there are a few that only run quarterly. If I based my actions on only a few weeks worth of statistics, I might end up dropping an index that’s critical to the performance of one of our quarterly reports. So be careful!

So there’s a taste of what DMVs I use. Which ones are your personal favorites?

SQL Server A to Z – Collation

One of the first decisions you need to make when installing SQL Server is to select a collation for the instance. Most of the time we simply choose the default and breeze on by. But do you really know all the effects that choice has on your database and the applications that run against it?

One of the first decisions you need to make when installing SQL Server is to select a collation for the instance. Most of the time we simply choose the default and breeze on by. But do you really know all the effects that choice has on your database and the applications that run against it?

What is the default?

By default, SQL Server chooses the collation that corresponds to the Windows system locale on your database server. You can see your current locale in the Regional and Language Options section of Control Panel. The default for U.S. servers is SQL_Latin1_General.

What does it affect?

The collation you choose will have 3 effects on your system.

- the code page – This is the mapping between the hex values that get stored in the database and the character they represent. It’s what makes 0x43 the letter C for the Latin alphabet.

- case sensitivity – Your collation determines whether C = c. This obviously has an impact on your applications and the data they’ll bring back during searches and joins. But an important thing to keep in mind is that it also impacts whether SELECT * FROM MyTab is the same as SELECT * FROM mytab. If you choose a case sensitive collation setting on installation, MyTab != mytab.

- sort order – The collation you select will also affect the sort order of query results. In a case sensitive dictionary sort (the kind used by Latin1_General_CS_AS), “cat” will come before “Cat”. However, in a binary sort order (Latin1_General_BIN), “Cat” comes before “cat”. In fact, “Toad” will come before “cat”, because the order is based on the bit value of the character.

Where is it set?

The collation of the server is selected at install time. This becomes the collation for all the system databases and any user databases that are created without specifying a collation. However, an individual user database can have a different collation from the SQL Server instance. And a column inside a table can have a different collation than the database or the instance. The database and column collation can be changed relatively easily. The only “easy” way to change the server collation is to rebuild the instance, so it’s worth taking a moment to think about your collation before installation.

What about Unicode?

SQL Server collations do not affect Unicode datatypes, nchar, nvarchar, etc. SQL Server collations are the ones that start with “SQL_”. These are provided to offer backward compatibility.

Cannot resolve collation conflict … for equal to operation

This, in my mind, is the biggest headache associated with collations. If you try to join 2 columns of incompatible collations, a case sensitive with a case insensitive for example, you’ll get a variation on the above error. SQL Server doesn’t know which collation’s rules to follow. To get around this, you’ll need to include the COLLATE clause in your query, like this:

USE AdventureWorks; GO SELECT * FROM MyTabCI ci INNER JOIN MyTabCS cs ON ci.ColA COLLATE Latin1_General_CS_AS = cs.ColA

This will tell SQL Server which collation to use for the join.

That’s about it. The important thing to remember with collations is that a little thought up front can save you a lot of hassle down the road.

TSQL Tuesday #20: T-SQL Best Practices

Once a month, on the 2nd Tuesday, the SQL Server online community has a virtual party of sorts called T-SQL Tuesday. T-SQL Tuesday was started by Adam Mechanic (blog|twitter) back in 2009 and has been going strong ever since. This month, I decided to crash the party with a submission of my own. The topic-du-jour is T-SQL Best Practices, hosted by Amit Banerjee (blog|twitter).

Once a month, on the 2nd Tuesday, the SQL Server online community has a virtual party of sorts called T-SQL Tuesday. T-SQL Tuesday was started by Adam Mechanic (blog|twitter) back in 2009 and has been going strong ever since. This month, I decided to crash the party with a submission of my own. The topic-du-jour is T-SQL Best Practices, hosted by Amit Banerjee (blog|twitter).

I’ll keep this short and sweet.

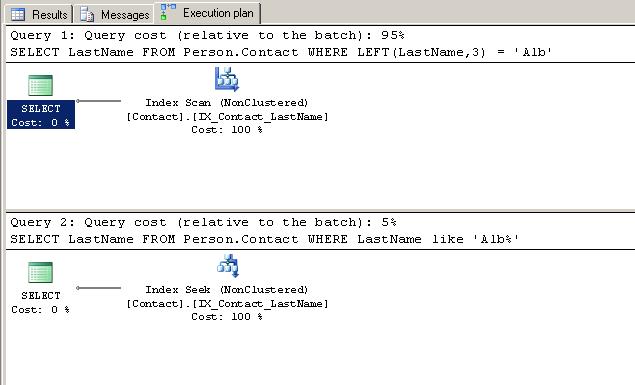

Wherever possible don’t use functions in the where clause. Please. Pretty please? Why? because it affects the way SQL Server optimizes the query and in most cases prevents proper usage of indexes. I’ll show you what I mean.

USE AdventureWorks; GO CREATE INDEX IX_Contact_LastName ON Person.Contact (LastName); GO -- sad dba SELECT LastName FROM Person.Contact WHERE LEFT(LastName,3) = 'Alb' GO -- happy dba SELECT LastName FROM Person.Contact WHERE LastName like 'Alb%' GO

Two perfectly legitimate queries, returning the same data. But take a look at the execution plans for those queries.

Even though, logically speaking, these queries are doing the same thing, the LEFT function on the first query is preventing the optimizer from using an index seek operation. Instead, it’s scanning the entire index (much like a full table scan). This isn’t a huge problem on such a small example, but believe me, it becomes a ginormous problem on larger tables and more complex queries.

So please, whenever possible, avoid this pitfall. Your DBA will thank you.

SQL Server A to Z – Backups

One of the most important, if not the most important tasks of the DBA is backing up your database. The database backup is the most basic disaster recovery plan you can have in place. It’s certainly the easiest to implement. Today I’m going to go over the basics of backups, including the different types of backups and how they work.

One of the most important, if not the most important tasks of the DBA is backing up your database. The database backup is the most basic disaster recovery plan you can have in place. It’s certainly the easiest to implement. Today I’m going to go over the basics of backups, including the different types of backups and how they work.

Full Backup

A full backup of a database includes all data pages in the database and just enough of the transaction log to be able to recover up to the end time of the backup. Curious as to all the internal steps involved in a full backup? Turn on trace flags 3004 and 3605 using DBCC TRACEON (3004, 3605, -1) and perform a backup a backup. Then take a look at your errorlog. Just don’t forget to turn off the trace flags when you’re done, since they can cause some performance degradation in your backups.

Differential Backup

A differential backup is an incremental backup of all changed data since the last full backup. The last full backup. Not the last differential backup. This is an important distinction to keep in mind because a lot of new DBAs (especially former Oracle DBAs) think that, in the event of a restore, they need to restore the full backup and then all of the differential backups taken after. And that’s simply not the case. You only need to restore the latest differential.

So how do differentials work? SQL Server uses something called a Differential Change Map (DCM) page to track changes to extents. The 6th page in every data file is a DCM page, and it tracks about 4GB worth of data. So, for every 4GB of data in your database, there’s a corresponding DCM keeping watch. Let’s take a quick look.

-- Start with a full backup

BACKUP DATABASE [AdventureWorks] TO DISK = N'D:\SQL2008\Backup\AdventureWorks.bak' WITH NOFORMAT, INIT, NAME = N'AdventureWorks-Full Database Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10

GO

-- Look at the DCM

DBCC TRACEON (3604)

DBCC PAGE('adventureworks',1,6,3)

GO

Scroll down through the results until you get to the DIFF MAP section. Your results should look something like this:

DIFF_MAP: Extent Alloc Status @0x000000000F51A0C2

(1:0) – (1:24) = CHANGED

(1:32) – (1:21752) = NOT CHANGED

Now let’s update some data and take another look.

-- update some data

update Production.TransactionHistory

set Quantity = 100

GO

-- check out the differential map now

DBCC PAGE('adventureworks',1,6,3)

GO

Again, scroll through the results to the DIFF MAP section. Now it probably looks more like this:

DIFF_MAP: Extent Alloc Status @0x000000000F51A0C2

(1:0) – (1:24) = CHANGED

(1:32) – (1:152) = NOT CHANGED

(1:160) – = CHANGED

(1:168) – (1:224) = NOT CHANGED

(1:232) – = CHANGED

(1:240) – (1:20096) = NOT CHANGED

(1:20104) – (1:20160) = CHANGED

(1:20168) – (1:20224) = NOT CHANGED

(1:20232) – (1:20288) = CHANGED

…

So by reading 1 page for every 4GB of data, SQL Server knows exactly what extents have been changed and therefore knows what extents need to be backed up. And that’s what makes differential backups so fast. If we were to perform a differential backup now and check the DCM page, we’d see no difference. However, if we performed a full backup, the DCM page would revert back to its original state.

Note that you can take a special type of full backup, called a Copy-Only backup that will not reset the DCM pages. Use this if you need to take a one-off backup, say to refresh a Dev environment, without interrupting your normal backup plan.

File Backup

Individual database files can be backed up and restored using full or differential file backups. This is helpful if only one data file is corrupted or lost and needs to be restored. File backups can also make backing up very large databases more manageable. But you really need to think through your backup/restore strategy if you’re using file backups. Miss one file and you could render your database unrecoverable. In my humble opinion, they’re generally not worth the administrative nightmare.

Partial backup

SQL Server 2005 introduced partial backups. Like file backups, partial backups are helpful in very large databases, however they don’t entail quite so much administration. A full or differential partial backup will back up all read/write filegroups in the database and, optionally, any read-only filegroups you specify.

Transaction Log Backup

If your database is in Full or Bulk-Logged recovery mode, you need to take transaction log backups. A transaction log backup backs up the active portion of the transaction log, including all transactions since the last log backup. If you’re using Bulk-Logged recovery, the log backup also includes the actual data pages for any minimally logged transactions. Once a log backup is performed, that portion of the transaction log is freed up for reuse. This prevents those pesky transaction log full (9002) errors.

SQL Server A to Z – Audit

In previous versions of SQL Server, there were a number of methods for auditing activity within an instance or database. You could use DDL triggers to track any DDL changes, login auditing to write login successes or failures to the error log, Profiler tracing, and even C2/Common Criteria Compliance Auditing. But each of these methods had its shortcomings. It was either too limited in scope or granularity or just too hard to administer.

In previous versions of SQL Server, there were a number of methods for auditing activity within an instance or database. You could use DDL triggers to track any DDL changes, login auditing to write login successes or failures to the error log, Profiler tracing, and even C2/Common Criteria Compliance Auditing. But each of these methods had its shortcomings. It was either too limited in scope or granularity or just too hard to administer.

Introducing SQL Server Audit

With SQL Server 2008 we’re introduced to a more comprehensive solution called SQL Server Audit. With SQL Server Audit you can track events at the instance or database level. You can audit login failures at the server level, for example, or track object changes within a database, or even to audit T-SQL statements being issued against a specific object.

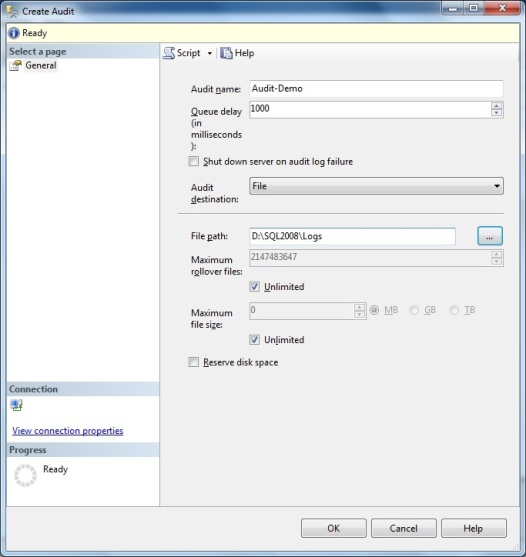

Create the Audit object

To get started using SQL Server Audit, we need to create an Audit Object. This can be done through SSMS or T-SQL. Using SSMS, right-click the Audit folder under Security and select New Audit. Give the new audit a name and specify where you want the output to go. You can choose to write to a file, the Application Log, or the Security Log. Personally, I like to output to a separate file. If you choose File, specify the path where you want the output file stored and, optionally the max size for the file. Click OK.

Create the Audit Specification

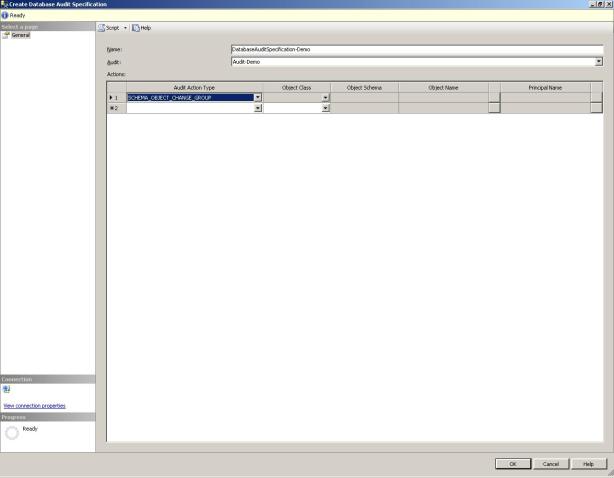

Now that we’ve created the audit, we need to tell it what to track. To do this, we create an audit specification. Audit specifications can be created at the server level or at the database level, each having its own set of actions. For the sake of this demonstration, let’s say I want to audit any DDL changes made to objects in the AdventureWorks database. Within the AdventureWorks database in SSMS, right-click Database Audit Specifications under Security, and select New Database Audit Specification… Give your spec a name and select the audit we just created. Under Actions, for the Audit Action Type, choose SCHEMA_OBJECT_CHANGE_GROUP and click OK.

Enable the Audit and the Audit Specifications

You’ll notice that both the Audit and the Audit Specification are created disabled. Right-click on each and enable them. Now we’re ready to test it.

USE AdventureWorks GO CREATE table AuditDemo ( Column1 int); GO

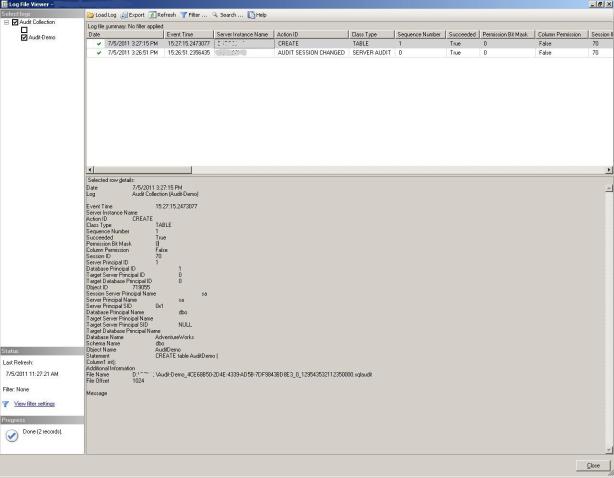

To view the audit log, right-click on the Audit object and select View Audit Logs.

And there you have it. Easier than Profiler, less invasive than triggers, and more specific than C2 auditing. I was impressed by the sheer number of events you can audit and how easy this was to implement. What do you think? Do you plan on using SQL Server Audit in your environment?

Scripting out a database – revisited

Doh! A while back I posted a Powershell script that would script out a SQL Server database. Well, a reader pointed out to me yesterday that it wasn’t quite working for him. And wouldn’t you know, it wasn’t working for me either. The problem was that when I created the script I had a specific database in mind. And this database resided on a SQL 2005/Powershell 1.0 server. My script worked like a champ there. On a SQL 2008/Powershell 2.0 server, well, not so much. So I spent some time yesterday making some fixes and I think I’ve got it this time (for either system).

Doh! A while back I posted a Powershell script that would script out a SQL Server database. Well, a reader pointed out to me yesterday that it wasn’t quite working for him. And wouldn’t you know, it wasn’t working for me either. The problem was that when I created the script I had a specific database in mind. And this database resided on a SQL 2005/Powershell 1.0 server. My script worked like a champ there. On a SQL 2008/Powershell 2.0 server, well, not so much. So I spent some time yesterday making some fixes and I think I’ve got it this time (for either system).

Many thanks to William for the heads up.

The new code:

# SQLExportDBSchema.ps1

# Usage -

# From PS: .\SQLExportDBSchema.ps1 "[Drive letter]" "[Server]" "[Database]"

# From CMD: powershell -command "& 'D:\powershell\SQLExportDBSchema.ps1' "[Drive letter]" "[Server]" "[Database]" "

param(

[string]$drive=$null,

[string]$srv=$null,

[string]$database=$null

)

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO') | out-null

$filepath = $drive+":\"+$srv+"_backup\"+$database+"\"

$s = new-object ('Microsoft.SqlServer.Management.Smo.Server') $srv

$db = New-Object ("Microsoft.SqlServer.Management.SMO.Database")

$db=$s.Databases[$database]

$scrp = new-object ('Microsoft.SqlServer.Management.Smo.Scripter') ($s)

$scrp.Options.AppendToFile = $False

$scrp.Options.ClusteredIndexes = $True

$scrp.Options.DriAll = $True

$scrp.Options.ScriptDrops = $False

$scrp.Options.IncludeHeaders = $True

$scrp.Options.IncludeIfNotExists = $True

$scrp.Options.ToFileOnly = $True

$scrp.Options.Indexes = $True

$scrp.Options.Triggers = $True

$scrp.Options.Permissions = $True

#CREATE DATABASE

$scrp.Options.AppendToFile = $False

$outfile =$filepath+$database+"_create.sql"

$scrp.Options.FileName = $outfile

$scrp.Script($db)

#SECURITY

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_security.sql"

$scrp.Options.FileName = $outfile

$scrp.Options.IncludeDatabaseRoleMemberships = $true

Foreach ($ro in $db.Roles)

{

$scrp.Script($ro)

$scrp.Options.AppendToFile = $True

}

$scrp.Options.AppendToFile = $True

Foreach ($appro in $db.ApplicationRoles)

{

$scrp.Script($appro)

}

Foreach ($us in $db.Users)

{

$scrp.Script($us)

}

Foreach ($sch in $db.Schemas)

{

$scrp.Script($sch)

}

$scrp.Options.WithDependencies = $True

#PARTITIONING

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_partitioning.sql"

$scrp.Options.FileName = $outfile

Foreach ($part in $db.PartitionFunctions)

{

$scrp.Script($part)

$scrp.Options.AppendToFile = $True

}

$scrp.Options.AppendToFile = $True

Foreach ($psch in $db.PartitionSchemes)

{

$scrp.Script($psch)

}

#TABLES

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_tables.sql"

$scrp.Options.FileName = $outfile

Foreach ($tab in $db.Tables)

{

$scrp.Script($tab)

$scrp.Options.AppendToFile = $True

}

#DDLTRIGGERS

$scrp.Options.WithDependencies = $False

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_triggers.sql"

$scrp.Options.FileName = $outfile

Foreach ($trig in $db.Triggers)

{

$scrp.Script($trig)

$scrp.Options.AppendToFile = $True

}

$scrp.Options.WithDependencies = $True

#VIEWS

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_views.sql"

$scrp.Options.FileName = $outfile

$views = $db.Views | where {$_.IsSystemObject -eq $false}

$scrp.Script($views)

#FUNCTIONS

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_functions.sql"

$scrp.Options.FileName = $outfile

$functions = $db.UserDefinedFunctions | where {$_.IsSystemObject -eq $false}

$scrp.Script($functions)

#STORED PROCS

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_storedProcs.sql"

$scrp.Options.FileName = $outfile

$storedprocs = $db.StoredProcedures | where {$_.IsSystemObject -eq $false}

$scrp.Script($storedprocs)

#SYNONYMS

$scrp.Options.AppendToFile = $False

$outfile = $filepath+$database+"_synonyms.sql"

$scrp.Options.FileName = $outfile

Foreach ($syn in $db.Synonyms)

{

$scrp.Script($syn)

$scrp.Options.AppendToFile = $True

}

Goals

Most people set goals in January. It’s a brand new calendar year full of possibilities. They join gyms. They quit smoking. I’ve never been one for New Year’s resolutions, personally. And I don’t think goal-setting should be confined to January. For me, in my professional life, July makes more sense. I’ve had my annual review at work, I’ve taken stock of the past year, and it’s time to start focusing on what I want the next 12 months to look like.

Most people set goals in January. It’s a brand new calendar year full of possibilities. They join gyms. They quit smoking. I’ve never been one for New Year’s resolutions, personally. And I don’t think goal-setting should be confined to January. For me, in my professional life, July makes more sense. I’ve had my annual review at work, I’ve taken stock of the past year, and it’s time to start focusing on what I want the next 12 months to look like.

So, over the past couple of weeks, I’ve been thinking a lot about my goals for the coming year. I wanted goals that would challenge me. Even if I might not necessarily achieve them, they’d push me to work harder and get outside my comfort zone. I debated whether to publish them here. What if I didn’t reach them? But in the end I decided the added accountability of making them public would outweigh any embarrassment I might feel in the end. So here they are, in no particular order.

Certification

I actually debated whether to make this a goal or not, mainly because I’ve wondered about the value of certifications for an experienced DBA. However, in the end, I figured at the very least it would help me round out my knowledge base and get more exposure to those facets of SQL Server I don’t work with regularly. So by next July I’d like to have my MCITP Database Administrator. In order to accomplish this, I plan to spend 2 hours a week studying for the exams. Having this blog is also a great help in solidifying my knowledge. If I’m gonna write about it, I better know about it.

Present at a SQL Saturday

Given the fact that I’ve never presented anywhere, this one is a bit lofty, I admit. But hey, I said I wanted a challenge, right? So far I’ve gotten the ball rolling by volunteering to present at a developer’s forum where I work. And I’ve started bouncing around ideas for a presentation at our local user group. I think my biggest hurdle here is my thinking that I need to present on something no one has done before, that it needs to be super-technical, and that I need to be an expert in the subject. When, in fact, probably none of these is true. So I need to start looking more at topics that interest me, perhaps even how I used feature X to solve problem Y.

Increase this blog’s readership

I started this blog back in February. I averaged 1 hit per day that month. Last month I averaged 14. Don’t get me wrong, I’m glad anyone is reading. But, obviously, I’d like more people to be reading. This is my main outlet, after all. So, I plan on doing a few things to help get me there. I want to blog more regularly, at least twice a week. I was doing pretty well with this until the past few weeks when life and work just got away from me. I need to remember that not every post has to be a thesis on SQL Server, it’s ok to have quick, concise posts mixed in. I also need to take a look at how this blog is reflecting my personality and what I can do to make it more… well… me. So hopefully you’ll be seeing some changes around here. Do I have a particular number in mind for this goal? Not really. I don’t think the number itself is important, it’s the work I put into it that matters.

So there they are, my goals for 2011-2012. Happy new year!